
Wed, July 10, 2024

Base image testing with Icepak v1 and Polar v0.2

When we launched the MVP of our image builder and image server, we had to move fast to meet our deadline of launching before May 2024. Getting the MVP out the door meant postponing many things we wanted to do. One of those things was automated image testing.

We discovered that not all images are built equal. In some cases a built image's networking would fail, or some basic apps would not work. For example, alpine/edge images would sometimes break, which is understandable given that it is the 'edge' version. To keep our platform reliable, we needed a process to make sure the images we ship to production are not broken and work out of the box.

Polar Image Server v0.2 upgrades

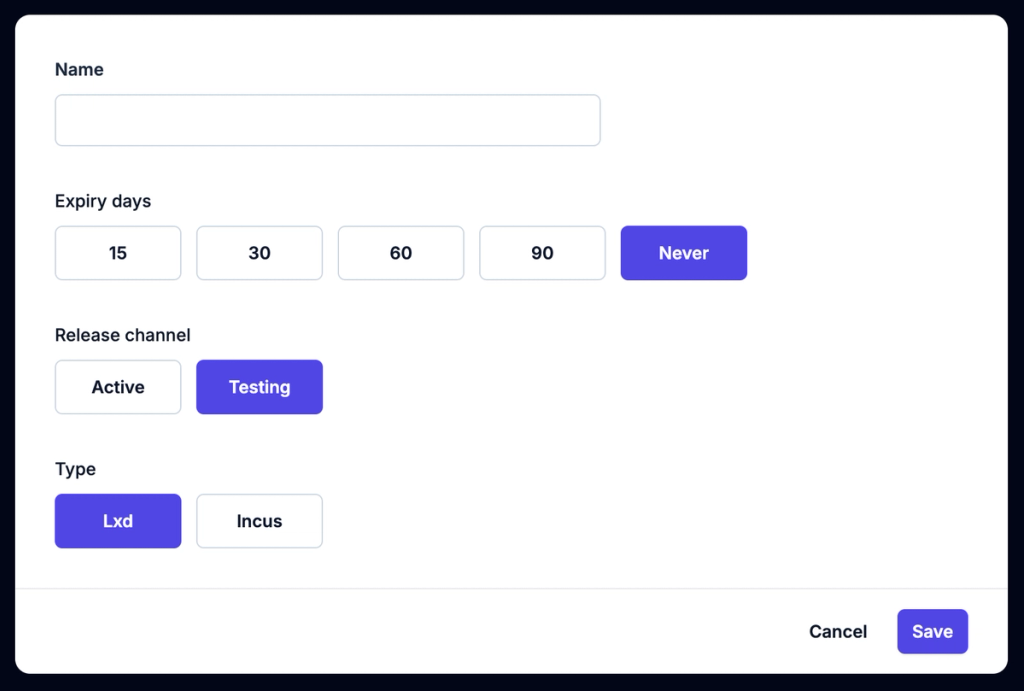

Our Polar server received upgrades that let us move images into 'testing' before making them 'active'. This also meant implementing the ability to choose which 'release channel' a given space follows.
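To make the channel idea concrete, here is a minimal Python sketch of how image status and release channels could relate. The 'testing' and 'active' states come from the description above; the `Image` type, the `CHANNEL_STATUSES` mapping, `visible_images()` and `promote()` are hypothetical illustrations, not Polar's actual data model.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    TESTING = "testing"
    ACTIVE = "active"
    INACTIVE = "inactive"


@dataclass
class Image:
    alias: str
    status: Status


# Hypothetical: which image statuses each release channel exposes to a space.
CHANNEL_STATUSES = {
    "active": {Status.ACTIVE},
    "testing": {Status.TESTING},
}


def visible_images(images: list[Image], channel: str) -> list[Image]:
    """Return the images a space following `channel` would see."""
    allowed = CHANNEL_STATUSES[channel]
    return [image for image in images if image.status in allowed]


def promote(image: Image) -> None:
    """Move an image that passed testing into the 'active' channel."""
    if image.status is not Status.TESTING:
        raise ValueError(f"{image.alias} is not in testing")
    image.status = Status.ACTIVE
```

Under these assumptions a space following 'testing' sees only candidate images, while one following 'active' sees only promoted ones, and promotion is a single status change rather than a copy of the image.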

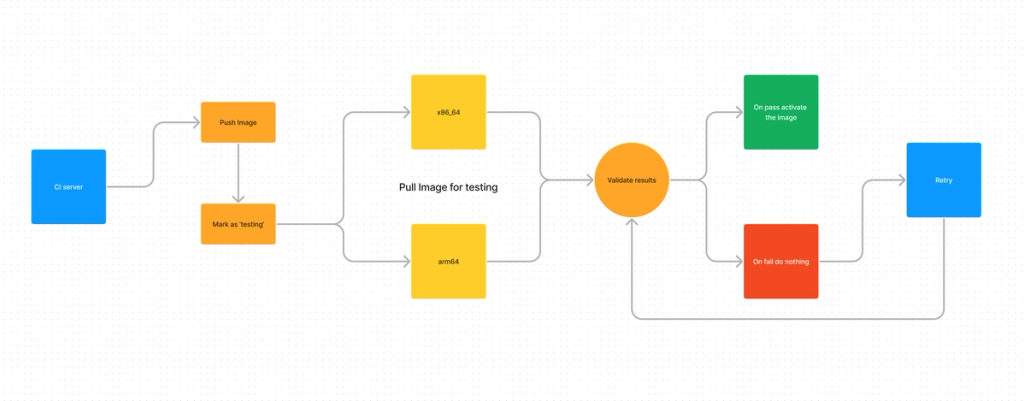

We wanted the testing environment to mimic the production environment exactly, so that we could run full integration tests on the images. This means we don't test the built image on the CI server. Instead, we run the tests in a real LXD environment, pulling the images from our image server's 'testing' channel. We have two bare-metal machines set up: an x86_64 server, and a Raspberry Pi for testing arm64 images.

The architecture of our testing environment looks like the following:

With this workflow we are able to validate our images and ensure we don't ship anything broken into production.

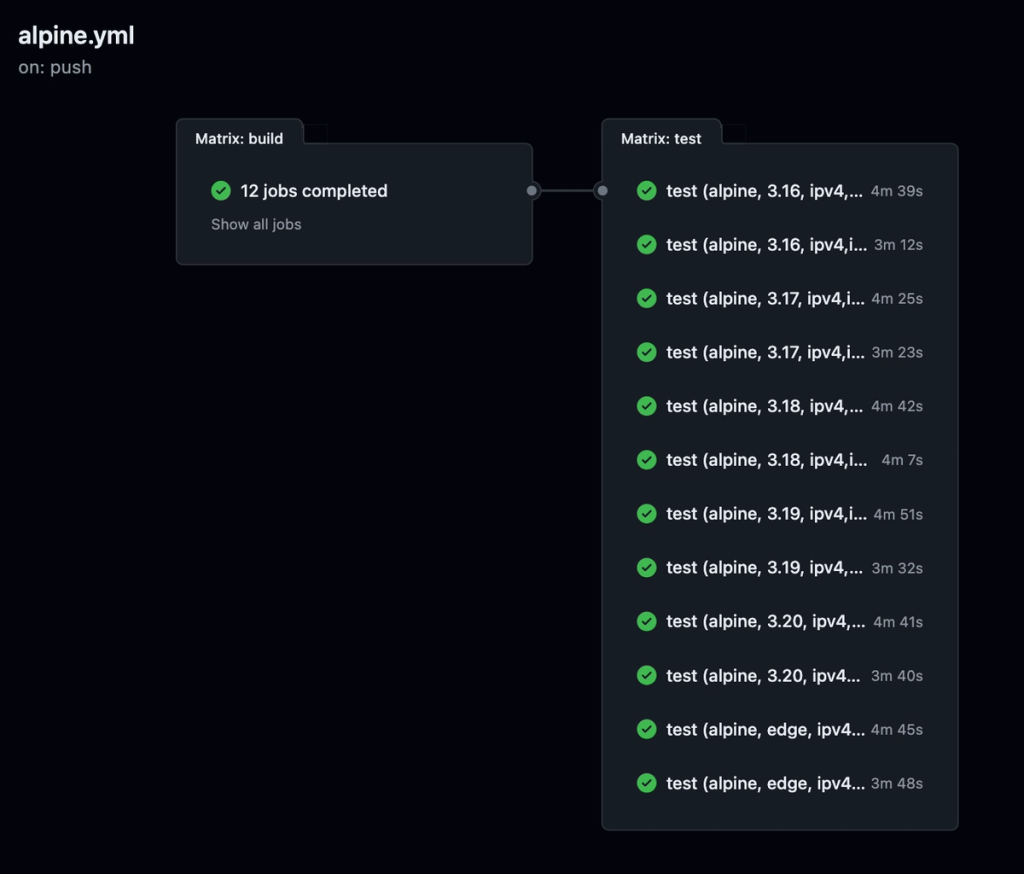

Upgrades to Icepak

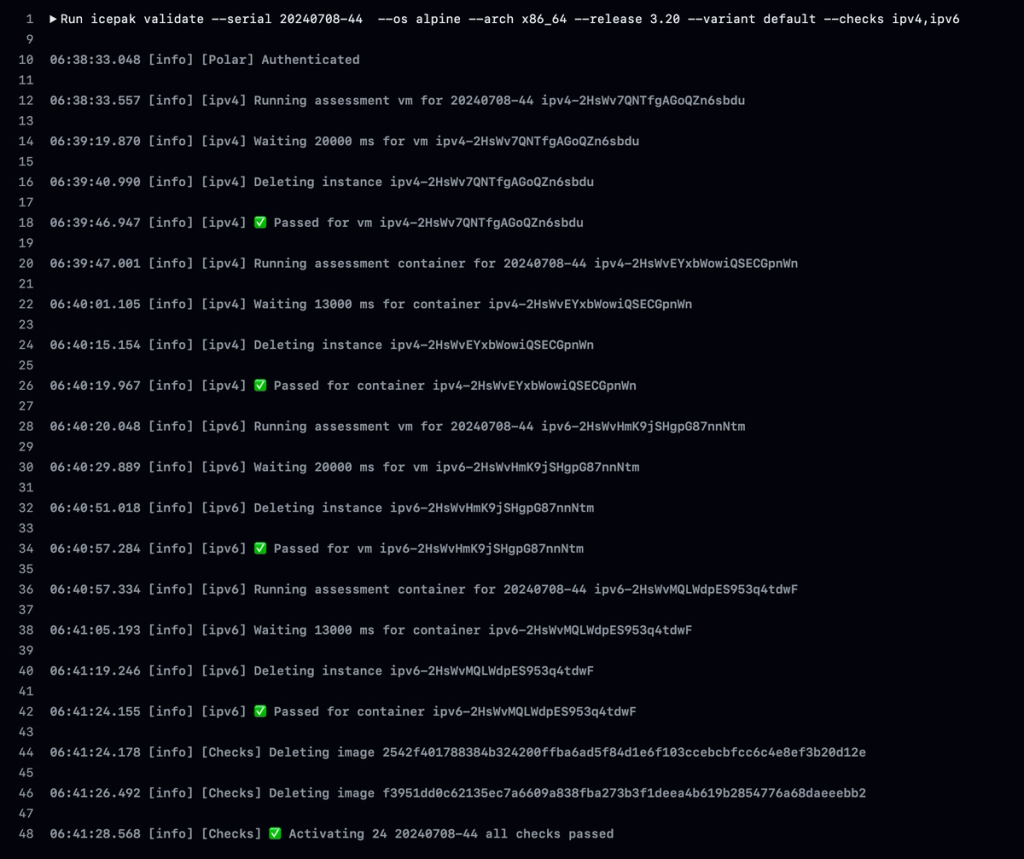

Icepak, the GitHub Action that builds the images, also received significant upgrades that integrate with all the new changes in Polar. The testing code itself is implemented in Icepak, which records the results of the validation process in Polar. That way we can track which tests have already run and passed, so they don't need to run again on retry.
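The retry behaviour can be illustrated with a small sketch: checks already recorded as passed are skipped, and only the rest are run. Here an in-memory `passed` set stands in for the results Icepak stores in Polar; `run_pending()` and the check names are hypothetical, not Icepak's API.

```python
from typing import Callable, Dict, Set


def run_pending(checks: Dict[str, Callable[[], bool]], passed: Set[str]) -> Dict[str, bool]:
    """Run every check not yet recorded as passed and return this run's results.

    `passed` is a hypothetical stand-in for the test results tracked in Polar;
    it is updated in place so a later retry can skip the checks that succeeded.
    """
    results: Dict[str, bool] = {}
    for name, check in checks.items():
        if name in passed:
            continue  # passed on an earlier attempt, no need to run it again
        ok = check()
        results[name] = ok
        if ok:
            passed.add(name)
    return results
```

If a retry is triggered after a networking check passed but a package-manager check failed, only the failing check runs the second time.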

If you are interested in seeing this in action, you can take a look here:

Lastly, on the Polar server we also implemented a pruning mechanism that deactivates any image in the 'testing' bucket that has not passed its tests within 3 days. This keeps our testing release channel clean, containing only images relevant for testing.
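A minimal sketch of the pruning rule, assuming each testing image records when it was created and whether it has passed. The `TestingImage` fields and `prune()` are hypothetical, and Polar may schedule and store this differently; only the 3-day window comes from the description above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Images that have not passed within this window get deactivated.
PRUNE_AFTER = timedelta(days=3)


@dataclass
class TestingImage:
    alias: str
    created_at: datetime
    passed: bool = False
    deactivated: bool = False


def prune(images: list[TestingImage], now: datetime) -> list[str]:
    """Deactivate testing images older than PRUNE_AFTER that never passed.

    Returns the aliases that were deactivated on this run.
    """
    pruned = []
    for image in images:
        expired = now - image.created_at > PRUNE_AFTER
        if expired and not image.passed and not image.deactivated:
            image.deactivated = True
            pruned.append(image.alias)
    return pruned
```

Run periodically, for example from a scheduled job, a second call is a no-op because already-deactivated images are skipped.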

Future Plans

We designed things this way because it opens up many possibilities. In the future we will have prebuilt images for CUDA / ROCm, and with this architecture we are not limited by the resources on the CI server. We can test our images on an LXD server that has all the necessary resources. For example, we can run tests to ensure that the CUDA runtime works with the GPU and that nvidia-smi outputs the expected result.
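Since CUDA images are still a future plan, the following is only a sketch of what such a GPU check could look like. It relies on the standard `nvidia-smi --query-gpu=name,driver_version --format=csv,noheader` query, run inside a test instance via `lxc exec`; the instance name, the function names and the pass criterion (at least one GPU reporting a driver version) are assumptions.

```python
def nvidia_smi_command(instance: str) -> list[str]:
    """Build an `lxc exec` call that queries GPU name and driver version."""
    return [
        "lxc", "exec", instance, "--",
        "nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader",
    ]


def parse_gpus(output: str) -> list[tuple[str, str]]:
    """Parse `name, driver_version` lines into (name, driver) tuples."""
    gpus = []
    for line in output.strip().splitlines():
        parts = [field.strip() for field in line.split(",")]
        if len(parts) != 2:
            raise ValueError(f"unexpected nvidia-smi line: {line!r}")
        gpus.append((parts[0], parts[1]))
    return gpus


def check_gpu_visible(output: str) -> bool:
    """Hypothetical pass criterion: at least one GPU with a driver version."""
    try:
        gpus = parse_gpus(output)
    except ValueError:
        return False  # e.g. an error message instead of CSV output
    return bool(gpus) and all(name and driver for name, driver in gpus)
```

Keeping the parser separate from the command means the pass criterion can be tested against captured output without a GPU.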

Wrap up

All the work that went into enabling this testing mechanism is open source. You are most welcome to give us a star to show your support.

- Polar Image Server - https://github.com/upmaru/polar

- Icepak - https://github.com/upmaru/icepak

- Image Server - https://images.opsmaru.com